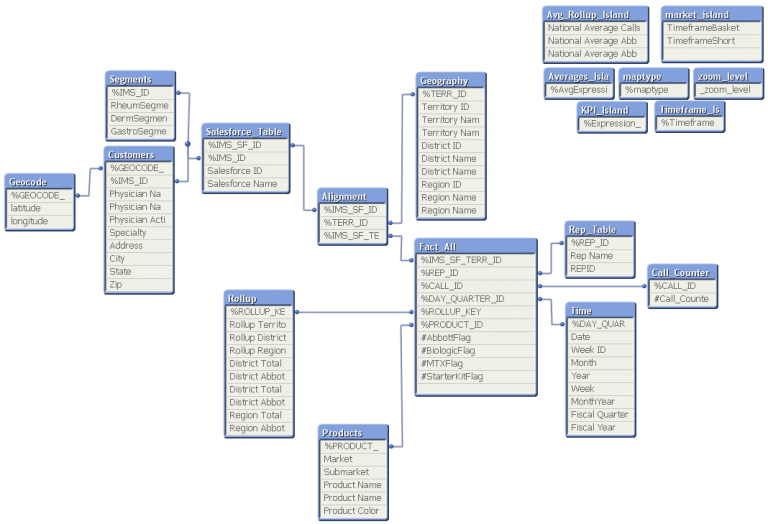

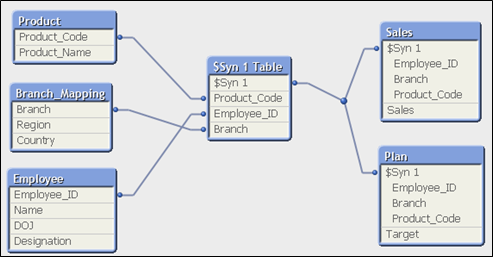

How much memory does a QlikView server need? This is a question I am always asked when designing and releasing QlikView applications to various user groups. As I'm sure you know, QlikView lets users analyze data quickly because of its associative, in-memory design. Each unique value is stored in memory only once; every other occurrence is a pointer to that stored value. Memory and CPU sizing are therefore critical to the end-user experience, because performance is directly tied to the hardware QlikView runs on.
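To make the "stored once, referenced by pointer" idea concrete, here is a minimal Python sketch of dictionary-style column encoding. It is a generic illustration of the concept, not QlikView's actual internal storage format; the function name `encode_column` and the sample values are hypothetical.

```python
def encode_column(values):
    """Store each distinct value once; each row keeps a small integer pointer to it."""
    symbols = []   # distinct values, each stored exactly once
    index_of = {}  # value -> its position in `symbols`
    pointers = []  # one integer per row, pointing into `symbols`
    for v in values:
        if v not in index_of:
            index_of[v] = len(symbols)
            symbols.append(v)
        pointers.append(index_of[v])
    return symbols, pointers


def decode_column(symbols, pointers):
    """Rebuild the original rows by following each pointer."""
    return [symbols[p] for p in pointers]


col = ["North", "South", "North", "North", "East", "South"]
symbols, pointers = encode_column(col)
print(symbols)   # ['North', 'South', 'East']
print(pointers)  # [0, 1, 0, 0, 2, 1]
```

Six rows end up as three distinct strings plus six small integers, which illustrates why the amount of unique data, not just the row count, matters for memory.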

The main performance factors in QlikView are data model complexity, the amount of unique data, UI design, and the number of concurrent users. All of these factors affect scalability, which is roughly linear in QlikView.

A basic formula can be used to estimate the memory needed as a starting point.

The formula:

For each QVW:

RAM = (Size on Disk * 4) + (Size on Disk * 4) * 0.05 * (Number of Concurrent Users)

where 4 is the file-size multiplier and 0.05 is the user ratio.
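The formula translates directly into a small helper. This is a minimal Python sketch; the function and parameter names are my own, and the defaults of 4 and 0.05 are the file-size multiplier and user ratio from the formula.

```python
def estimate_qvw_ram_gb(size_on_disk_gb: float, concurrent_users: int,
                        multiplier: float = 4.0, user_ratio: float = 0.05) -> float:
    """Starting-point RAM estimate (GB) for a single QVW."""
    base = size_on_disk_gb * multiplier  # (Size on Disk * 4): RAM for the loaded document
    per_user = base * user_ratio         # (Size on Disk * 4) * 0.05: added per concurrent user
    return base + per_user * concurrent_users


print(estimate_qvw_ram_gb(5, 50))
```

Keeping the multiplier and user ratio as parameters makes it easy to adjust them if your own measurements suggest different values.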

Here’s an example:

- Source data size = 50GB
- Compression ratio = 90%
- File-size multiplier = 4
- User ratio = 5%
- Concurrent users = 50

*Note that the number of concurrent users is NOT the total number of supported users.

The size on disk is 50GB * (1 - 0.9) = 5GB

RAM = (5GB * 4) + (5GB * 4) * 0.05 * 50 = 20GB + 50GB = 70GB for 50 concurrent users
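The worked example can be checked end to end, starting from the source data size and compression ratio. This Python sketch reproduces the numbers above; the helper names are hypothetical.

```python
def size_on_disk_gb(source_gb: float, compression_ratio: float) -> float:
    # A 90% compression ratio means the QVW keeps 10% of the source size.
    return source_gb * (1 - compression_ratio)


def estimate_ram_gb(disk_gb: float, users: int,
                    multiplier: float = 4.0, user_ratio: float = 0.05) -> float:
    # (Size on Disk * 4) + (Size on Disk * 4) * 0.05 * users
    base = disk_gb * multiplier
    return base + base * user_ratio * users


disk = size_on_disk_gb(50, 0.90)
ram = estimate_ram_gb(disk, 50)
print(f"size on disk = {disk:.1f} GB, RAM = {ram:.1f} GB")
```

Floating-point rounding makes the raw values land a hair under 5 and 70, so the print formats them to one decimal place.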

Given this formula and these specs, the suggested minimum is 70GB of RAM at the start of an implementation.

In a typical QlikView deployment, word of the return on investment eventually spreads, and more departments request their own QlikView deployments. Additional departments mean more diverse data sets and increased demand on the server.
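As more departments bring their own documents, the per-QVW estimates add up. Assuming the server-wide figure is simply the sum of the per-QVW results (the formula is stated per QVW), a sketch like this can flag when demand outgrows the installed RAM. The second document, its size and user count, and the 96GB server size are hypothetical inputs for illustration.

```python
from dataclasses import dataclass


@dataclass
class Qvw:
    name: str               # hypothetical document name, for illustration only
    size_on_disk_gb: float
    concurrent_users: int


def qvw_ram_gb(doc: Qvw, multiplier: float = 4.0, user_ratio: float = 0.05) -> float:
    # Per-QVW formula: (Size on Disk * 4) + (Size on Disk * 4) * 0.05 * users
    base = doc.size_on_disk_gb * multiplier
    return base + base * user_ratio * doc.concurrent_users


def total_ram_gb(docs: list[Qvw]) -> float:
    # Assumption: the server-wide estimate is the sum of the per-QVW estimates.
    return sum(qvw_ram_gb(d) for d in docs)


docs = [
    Qvw("sales.qvw", 5, 50),    # the worked example from this post
    Qvw("finance.qvw", 2, 20),  # hypothetical second department
]
installed_gb = 96               # hypothetical installed server RAM
needed = total_ram_gb(docs)
print(f"estimated {needed:.1f} GB of {installed_gb} GB installed")
if needed > installed_gb:
    print("estimate exceeds installed RAM; revisit server sizing")
```

Re-running the estimate whenever a new document is added gives an early signal that the server may need more memory.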

It is important to track any increase in demand so you can confirm that the server is still sized appropriately. One tool that is very helpful for performance monitoring is the QVS System Monitor. Please follow this link for more details about the QVS System Monitor app and how to deploy it: //community.qlik.com/docs/DOC-6699